Vertical scaling in Amazon RDS means changing the DB instance class (the instance type) to one with more or fewer vCPUs and more or less memory; allocated storage is configured separately. Here’s a step-by-step guide to vertically scaling an RDS instance running MySQL:

Preparation Steps:

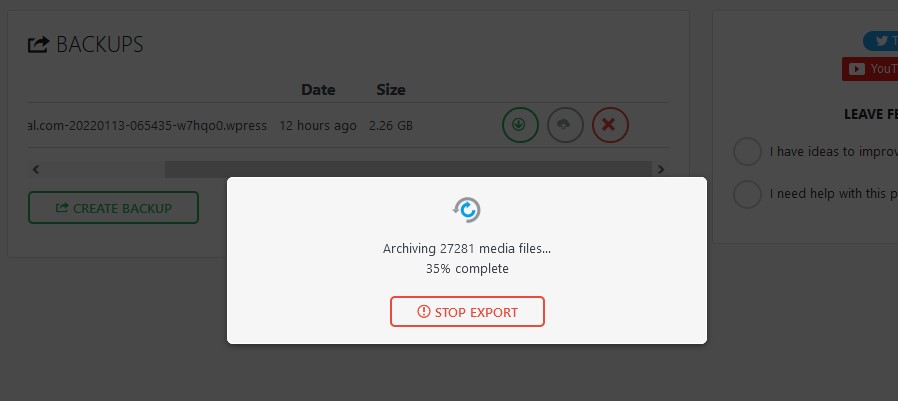

- Backup Data: Ensure you have a recent snapshot or backup of your database. Amazon RDS automated backups allow point-in-time recovery, but a manual DB snapshot taken just before the change gives you a known restore point.

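- Maintenance Window: Pick a time when user impact will be minimal. Changing the instance class restarts the DB instance, so a Single-AZ deployment is unavailable while the change is applied; on a Multi-AZ deployment the downtime is usually limited to a failover.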

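- Performance Metrics: Check your current resource utilization (for example, the CPUUtilization, FreeableMemory, and DatabaseConnections metrics in CloudWatch) to select an appropriate instance type, whether you are scaling up or down.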

- Test Environment: If possible, rehearse the scaling process in a test environment (for example, on an instance restored from a snapshot) to identify any potential issues.
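The metric review and the backup can also be scripted. The following is a minimal sketch in Python, assuming boto3 CloudWatch and RDS clients are passed in by the caller; the function names, the 14-day look-back, and the snapshot naming scheme are hypothetical choices for illustration, not AWS conventions.

```python
from datetime import datetime, timedelta, timezone


def summarize_metric(cloudwatch, instance_id, metric_name, days=14):
    """Summarize an AWS/RDS metric over the last `days` days.

    Assumes `cloudwatch` is a boto3 CloudWatch client. Returns None if no data.
    """
    end = datetime.now(timezone.utc)
    resp = cloudwatch.get_metric_statistics(
        Namespace="AWS/RDS",
        MetricName=metric_name,  # e.g. "CPUUtilization" or "FreeableMemory"
        Dimensions=[{"Name": "DBInstanceIdentifier", "Value": instance_id}],
        StartTime=end - timedelta(days=days),
        EndTime=end,
        Period=3600,  # hourly datapoints; one call returns at most 1,440
        Statistics=["Average", "Maximum"],
    )
    points = resp["Datapoints"]
    if not points:
        return None
    # Mean of the hourly averages; peak is the highest hourly maximum.
    return {
        "average": sum(p["Average"] for p in points) / len(points),
        "peak": max(p["Maximum"] for p in points),
    }


def take_pre_scaling_snapshot(rds, instance_id, now=None):
    """Create a manual DB snapshot and block until it is available.

    Assumes `rds` is a boto3 RDS client. Returns the snapshot identifier.
    """
    now = now or datetime.now(timezone.utc)
    # Hypothetical naming scheme: letters, digits and single hyphens only.
    snapshot_id = f"{instance_id}-pre-scaling-{now:%Y%m%d%H%M%S}"
    rds.create_db_snapshot(
        DBInstanceIdentifier=instance_id,
        DBSnapshotIdentifier=snapshot_id,
    )
    rds.get_waiter("db_snapshot_available").wait(DBSnapshotIdentifier=snapshot_id)
    return snapshot_id
```

With real clients this might be called as `summarize_metric(boto3.client("cloudwatch"), "mydb", "CPUUtilization")` and `take_pre_scaling_snapshot(boto3.client("rds"), "mydb")`, where `mydb` is a placeholder instance identifier. Reporting the peak alongside the average keeps short load spikes visible when sizing the new instance.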

Scaling Operation:

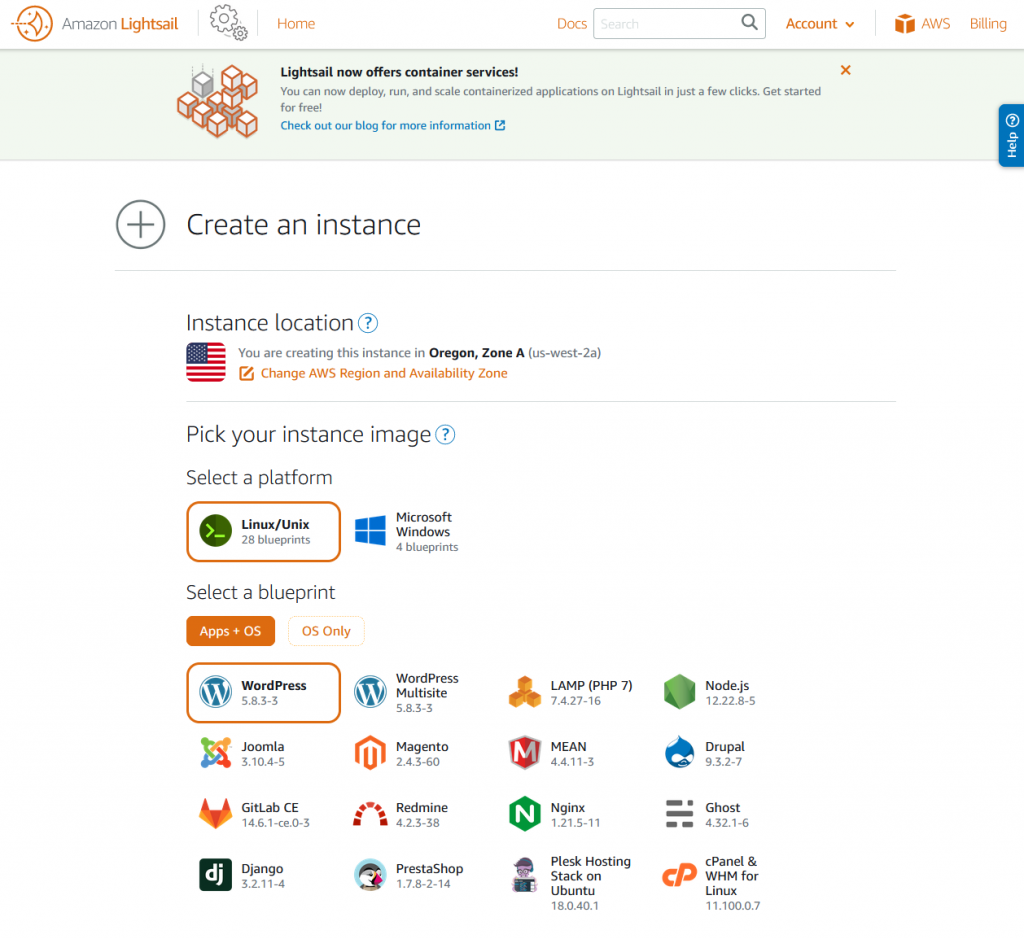

- Log In to the AWS Console: Navigate to the RDS section.

- Select Database: In the navigation pane, choose “Databases”, then choose the DB instance that you want to scale.

- Modify Instance: Click on the “Modify” button.

- Choose Instance Type: Scroll down to the “DB instance class” section and select the new instance type you want to switch to.

- Apply Changes: You have two options here:

  - Apply immediately: The change starts as soon as possible, and the instance is unavailable while it is resized (on Multi-AZ, typically only for the duration of a failover). Any other modifications pending for the maintenance window are applied at the same time.

  - Apply during the next maintenance window: The change is applied automatically during your next scheduled maintenance window, minimizing unplanned downtime.

- Confirm and Modify: Choose “Continue”, review the summary of modifications, and then choose “Modify DB instance” to start the scaling operation.
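The console steps above correspond to the RDS `ModifyDBInstance` API. This sketch assumes a boto3 RDS client is passed in; `scale_instance`, its polling interval, and its timeout are hypothetical. It polls `describe_db_instances` instead of relying only on the `db_instance_available` waiter, because immediately after the request the instance can still report `available` with the old class.

```python
import time


def scale_instance(rds, instance_id, new_class, apply_immediately=False,
                   poll_seconds=30, timeout_seconds=3600, sleep=time.sleep):
    """Request a new DB instance class; if applied immediately, wait for it.

    Assumes `rds` is a boto3 RDS client. Returns the instance description.
    """
    rds.modify_db_instance(
        DBInstanceIdentifier=instance_id,
        DBInstanceClass=new_class,
        ApplyImmediately=apply_immediately,
    )

    def describe():
        return rds.describe_db_instances(DBInstanceIdentifier=instance_id)["DBInstances"][0]

    if not apply_immediately:
        # Deferred: the new class shows up under PendingModifiedValues
        # until the next maintenance window.
        return describe()

    waited = 0
    while waited <= timeout_seconds:
        desc = describe()
        # Done only when the new class is in effect AND the instance is available.
        if desc["DBInstanceClass"] == new_class and desc["DBInstanceStatus"] == "available":
            return desc
        sleep(poll_seconds)
        waited += poll_seconds
    raise TimeoutError(f"{instance_id} did not reach {new_class} within {timeout_seconds}s")
```

For example, `scale_instance(boto3.client("rds"), "mydb", "db.r6g.xlarge", apply_immediately=True)`, where the identifier and target class are placeholders; confirm that the class you pick is offered for your MySQL version and Region. Injecting `sleep` keeps the polling loop easy to test without waiting in real time.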

Post-Scaling Steps:

- Monitor: Watch CloudWatch metrics such as CPUUtilization, FreeableMemory, ReadLatency, and WriteLatency to confirm that the new instance is performing as expected.

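- Check Connectivity: Changing the instance class does not change the RDS endpoint, so application configurations normally need no update. Confirm that applications reconnect cleanly after the restart or failover, and update configurations only if you moved to a different instance (for example, one restored from a snapshot), which has its own endpoint.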

- Update Alarms and Monitoring: Adjust CloudWatch alarms and custom monitoring to suit the new instance type. Thresholds set in absolute terms, such as FreeableMemory in bytes, were sized for the old instance's memory.

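- Optimization: Revisit database configuration and queries for the new hardware. In the default MySQL parameter group, memory-dependent settings such as innodb_buffer_pool_size are formulas based on {DBInstanceClassMemory} and adjust automatically, but values hard-coded in a custom parameter group do not.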

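- Rollback Plan: Be prepared to roll back if the new instance type does not meet your requirements, either by modifying the instance back to its original class (which causes another restart) or by restoring from the snapshot taken beforehand.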
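Alarm thresholds expressed in absolute units are the ones most likely to need attention after a resize, since FreeableMemory is reported in bytes. The sketch below, assuming a boto3 CloudWatch client, re-creates a low-memory alarm sized as a fraction of the new instance's memory; the function name, alarm name, 10% default, and 5-minute, 3-period evaluation are hypothetical choices. Because `put_metric_alarm` replaces an existing alarm of the same name in full, any notification actions have to be passed again.

```python
def update_freeable_memory_alarm(cloudwatch, instance_id, memory_gib,
                                 min_free_fraction=0.10, alarm_actions=(),
                                 alarm_name=None):
    """(Re)create a low-FreeableMemory alarm sized for the new instance class.

    Assumes `cloudwatch` is a boto3 CloudWatch client. `memory_gib` is the memory
    of the new DB instance class and must be supplied by the caller.
    Returns the threshold in bytes.
    """
    threshold_bytes = memory_gib * 1024 ** 3 * min_free_fraction
    cloudwatch.put_metric_alarm(
        AlarmName=alarm_name or f"{instance_id}-low-freeable-memory",
        Namespace="AWS/RDS",
        MetricName="FreeableMemory",  # reported in bytes
        Dimensions=[{"Name": "DBInstanceIdentifier", "Value": instance_id}],
        Statistic="Average",
        Period=300,           # 5-minute periods
        EvaluationPeriods=3,  # alarm after 3 consecutive periods below threshold
        Threshold=threshold_bytes,
        ComparisonOperator="LessThanThreshold",
        # The call overwrites an existing alarm, so actions are re-supplied here.
        AlarmActions=list(alarm_actions),
    )
    return threshold_bytes
```

For example, `update_freeable_memory_alarm(boto3.client("cloudwatch"), "mydb", memory_gib=32, alarm_actions=[topic_arn])`, where `mydb`, `32`, and `topic_arn` (an existing SNS topic ARN) are placeholders for your own values.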